Hopefully you just read the first part of this guide and are ready for implementing code on your virtual machine in order to schedule daily data imports to BigQuery.

Authorizing access to applications

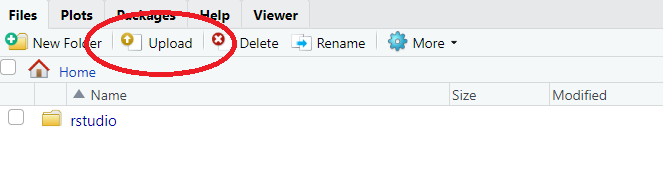

First off we need to upload our JSON file to the machine. Simply click on "upload" in the file box in the right corner of the screen and choose your JSON file.

Now add a new R script file to work with by clicking on the icon in the navigation bar or by pressing Ctrl+Shift+Alt+N. Then call for the libraries we need to use. All of them are pre installed on the machine.

library(googleAnalyticsR) library(googleAuthR) library(bigQueryR) library(searchConsoleR)

Now make sure you set the correct scopes and authorize the connection from your machine to your applications. You do this by authorizing with your JSON file.

options(googleAuthR.scopes.selected =c("https://www.googleapis.com/auth/analytics.readonly",

"https://www.googleapis.com/auth/bigquery",

"https://www.googleapis.com/auth/cloud-platform",

"https://www.googleapis.com/auth/webmasters"))

Sys.setenv(GAR_AUTH_FILE = "my_auth_file.json")

Sys.setenv(BQ_AUTH_FILE = "my_auth_file.json")

Sys.setenv(GA_AUTH_FILE = "my_auth_file.json")

Sys.setenv(SC_AUTH_FILE = "my_auth_file.json")

googleAuthR::gar_auth_service(json_file = "my_auth_file.json",

scope = getOption("googleAuthR.scopes.selected"))

Here we pick our scopes, set the environment variables to check the JSON file and then authorize the connections with gar_auth_service(). Let's set our global project and dataset for BigQuery as well.

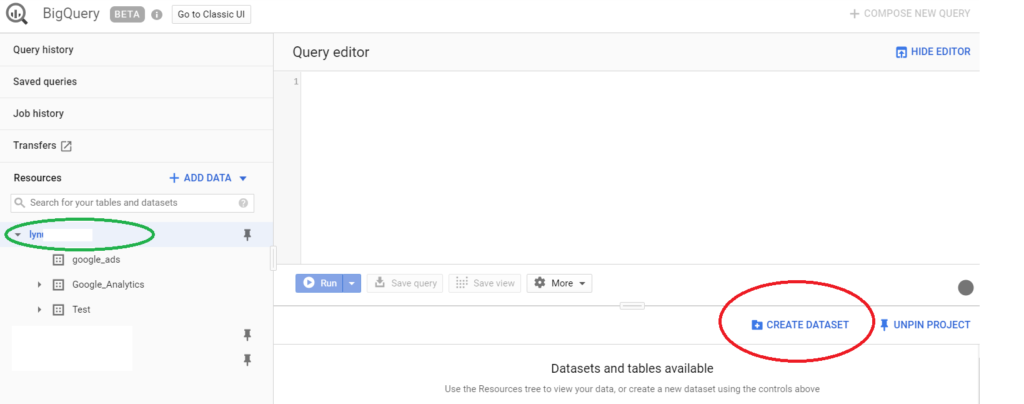

If you haven't worked with BigQuery previously then you'll have to create a dataset before you can set the global variables. Just head to https://console.cloud.google.com/bigquery, click on your project name (marked in green below) and then click on "Create dataset" (marked in red below). If you're living in the EU you HAVE TO pick a data location within the EU area.

Now we can set the global variables in the R script.

bqr_global_project("project-id")

bqr_global_dataset("dataset_id")

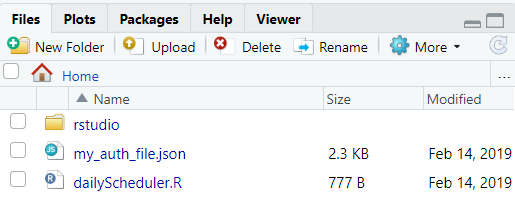

Save the file as "dailyScheduler.R" and put it in your home folder. It should look like this after you have saved it.

Writing the import functions - Google Analytics

Create a new R script file (Ctrl+Shift+Alt+N). What I'm gonna show you is how you can access a lot of valuable data and structuring it in a way that looks a little bit like the Google Analytics 360 integration with BigQuery. To do this though, you will have to use some custom dimensions in your GA property. You can of course skip it if you don't feel like you need them but I sincerely advice you to implement them. Simo Ahava wrote an excellent guide for how to implement it. What you need is IDs for users (client) and visits. You will also need a timestamp variable. All of it can be found at Simo's blog.

The function we will create will use three parameters; "id" for the Google Analytics View ID and dates for the period to collect data within. Let's dive into the function's code!

ga_import_traffic <- function(id, start, end){...}

dimensions <- c("dimension11", #clientId

"dimension12", #sessionId

"dimension13", #timestamp

"date",

"sourceMedium",

"campaign",

"adContent",

"keyword",

"adDistributionNetwork")

We start off by picking the dimensions we want to collect from Google Analytics. Here you can see the custom dimensions I was talking about earlier. This data will contain session level data for the user and the traffic related dimensions. We only want one single row per sessions and since timestamp is a hit level custom dimension we need to be a little bit creative.

We need to import some metric only to be able to use the Google Analytics API, don't mind why we want sessions if each row should be session based. We will remove that later. The interesting part here is that we are filtering out all results where "entrances" are greater than 0. That means that we will only get data from the first page view for a specific session, hence one row for each session in the output.

metrics <- c("sessions")

mfil <- met_filter("entrances", "GREATER_THAN",0,not = FALSE)

mfc <- filter_clause_ga4(list(mfil), "AND")

Now we will collect the data and then remove the "session" column. We will also rename our columns in order to get something that can be used easily in BigQuery.

ga <- google_analytics(id,

date_range = c(start,end),

dimensions = dimensions,

metrics = metrics,

met_filters = mfc)

ga <- ga[1:9]

colnames(ga) <- c("fullVisitorId",

"visitId",

"visitStartTime",

"date",

"source_sourceMedium",

"source_campaign",

"source_adContent",

"source_keyword",

"source_adDistributionNetwork")

The next part is a simple way to restructure the data. We want the "fullVisitorId" to be treated as a string in BigQuery and to prevent it from being converted to an integer we add "id_" before the numbers. We also change the format of the timestamp dimension to instead be converted to seconds, just like in the GA360 integration with BigQuery.

ga$fullVisitorId <- paste0("id_",gsub("\\.","",as.character(ga$fullVisitorId)))

t <- paste0(substr(ga$visitStartTime, 1, 10)," ", substr(ga$visitStartTime, 12,19))

t <- as.POSIXlt(t, origin = "%Y-%m-%d %H:%M:%S")

ga$visitStartTime <- as.integer(as.numeric(t, units="secs"))

return(ga)

That's the first function! But we want to have more data from Analytics, don't we? I'll show you two more import functions that I'm using. They are quite similar to the one above so I won't break them down for you.

ga_import_tech <- function(id, start, end){

dimensions <- c("dimension12", #sessionId

"deviceCategory",

"browser",

"browserVersion",

"operatingSystem",

"operatingSystemVersion")

metrics <- c("sessions")

mfil <- met_filter("entrances", "GREATER_THAN",0,not = FALSE)

mfc <- filter_clause_ga4(list(mfil), "AND")

ga <- google_analytics(id,

date_range = c(start,end),

dimensions = dimensions,

metrics = metrics,

met_filters = mfc)

ga <- ga[1:6]

colnames(ga) <- c("visitId",

"device_deviceCategory",

"device_browser",

"device_browserVersion",

"device_operatingSystem",

"device_operatingSystemVersion")

return(ga)

}

ga_import_geo <- function(id, start, end){

dimensions <- c("dimension12", #sessionId

"country",

"continent",

"subContinent",

"region",

"city",

"language",

"networkDomain")

metrics <- c("sessions")

mfil <- met_filter("entrances", "GREATER_THAN",0,not = FALSE)

mfc <- filter_clause_ga4(list(mfil), "AND")

ga <- google_analytics(id,

date_range = c(start,end),

dimensions = dimensions,

metrics = metrics,

met_filters = mfc)

ga <- ga[1:8]

colnames(ga) <- c("visitId", #sessionId

"geo_country",

"geo_continent",

"geo_subContinent",

"geo_region",

"geo_city",

"geo_language",

"geo_networkDomain")

return(ga)

}

Combining the GA data

Now here comes the interesting part. Did you notice that all functions used "sessionId" as a dimension? That is because now we can use that to merge all data on that key. The following function is the actual function you will call from the "dailyScheduler" script.

upload_ga <- function(id, start, end){

tryCatch({

traffic <- ga_import_traffic(id, start, end)

tech <- ga_import_tech(id, start, end)

geo <- ga_import_geo(id, start, end)

ga <- merge(traffic, tech, by = "visitId", all.x = TRUE)

ga <- merge(ga, geo, by = "visitId", all.x = TRUE)

job <- bqr_upload_data(tableId = "ga_sessions", upload_data = ga, create = "CREATE_IF_NEEDED")

print("Job for uploading data to BigQuery table ga_sessions ran successfully!")

}, error = function(err){

print("Error occured when trying to upload data to BigQuery table ga_sessions!")

#sendEmail(subject = "BigQuery upload error",

#message = "An error occured while trying to upload data from RStudio Server to BigQuery table ga_sesions!",

#to = "first.last@lynuhs.com")

})

}

As you can see above, the first thing we do in the script is to import the data by using the previous functions and then merge all the data by "visitId". Then we upload the data to BigQuery with the table name "ga_sessions". If the table doesn't exist (on first run it won't) then we will create it. A side note is that if you want to you can use bqr_create_table() to make the table partitioned by date. Just type an IF statement for checking if the table exists instead.

If the function runs successfully we print that out in the log, and otherwise we print out that there was an error. As you can see I have commented a line that will send an email to a chosen email address if there's an error. I'll show you how to activate it in the bottom of this post.

In my own scheduled machine I use even more Google Analytics data and then send it to different tables (ga_pages, ga_events etc). I use "visitId" as a way to connect the different tables in my queries in BigQuery. I won't show you all of those other tables but maybe that could be a good practise for you to try on your own? I'd recommend to start with the pages table. A hint is that some page dimensions might not look the same all the time, maybe title will change or maybe some dimensions will be NA/NULL. Think about what you need to include with the "visitId" and then how you could add those other page dimensions to it after.

Writing the import functions - Google Search Console

Before I'm gonna show you how to schedule the machine I'll give you the function for importing data from Google Search Console as well. This is super easy and I think you can figure out the entire functionality of it on your own.

upload_sc_queries <- function(site, start, end){

tryCatch({

search <- search_analytics(siteURL = site,

startDate = start,

endDate = end,

dimensions = c("date","query", "country","device"),

searchType = "web",

walk_data = "byDate")

if(all(!is.na(search$date))){

job <- bqr_upload_data(tableId = "sc_queries", upload_data = search, create = "CREATE_IF_NEEDED")

print("Job for uploading data to BigQuery table sc_queries ran successfully!")

}

}, error = function(err){

print("Error occured when trying to upload data to BigQuery table sc_queries!")

#sendEmail(subject = "BigQuery upload error",

#message = "An error occured while trying to upload data from RStudio Server to BigQuery table sc_queries!",

#to = "first.last@lynuhs.com")

})

}

Now make sure to save this file. Choose to create a new folder and name it "R". Then save the file as "schedulingFunctions.R" in that folder. This is simply to keep the environment clean.

Now open up your main script again. We have to make some changes for the actual scheduling functionality. Directly under the lines for importing libraries you will call for the function script you just created. This will run all the code in that file, i.e. importing all functions.

source("R/schedulingFunctions.R")

Then at the bottom of the script you will add the following lines:

upload_ga(your_ga_id, Sys.Date()-1, Sys.Date()-1)

upload_sc_queries("https://www.domain.com/", Sys.Date()-4, Sys.Date()-4)

Now the script is ready to be scheduled. Exciting, isn't it? By the way, the reason that Google Analytics will import data from yesterday but Search Console will only get it from 4 days ago is that Search console has a longer time lag for collecting data. We can't access the previous three days data.

Scheduling the script

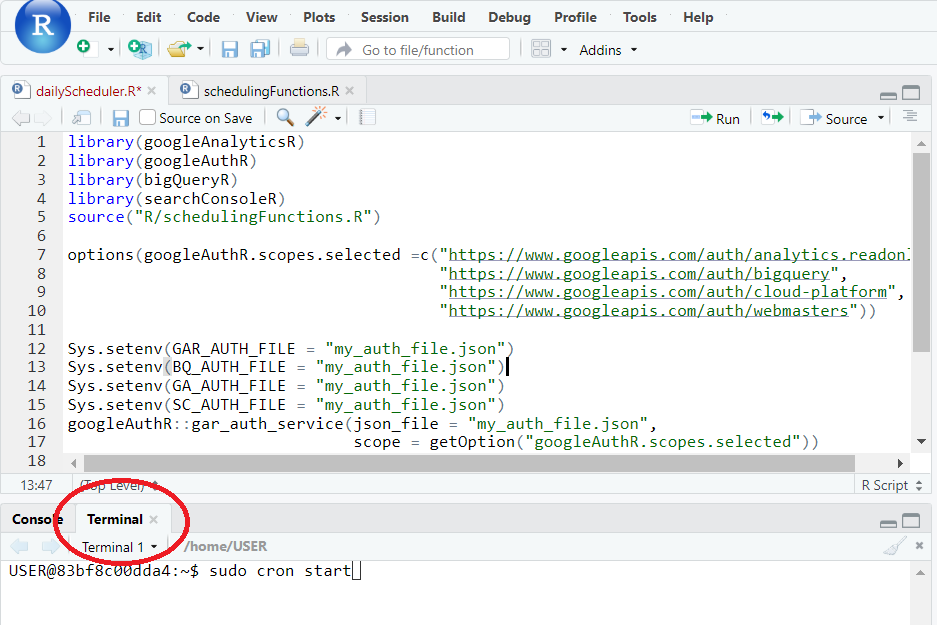

First things first. We will need to start up the Cron in order to use the schedule functions. We do this by clicking on "Terminal", marked in red in the image below. And there we type "sudo cron start" and press enter.

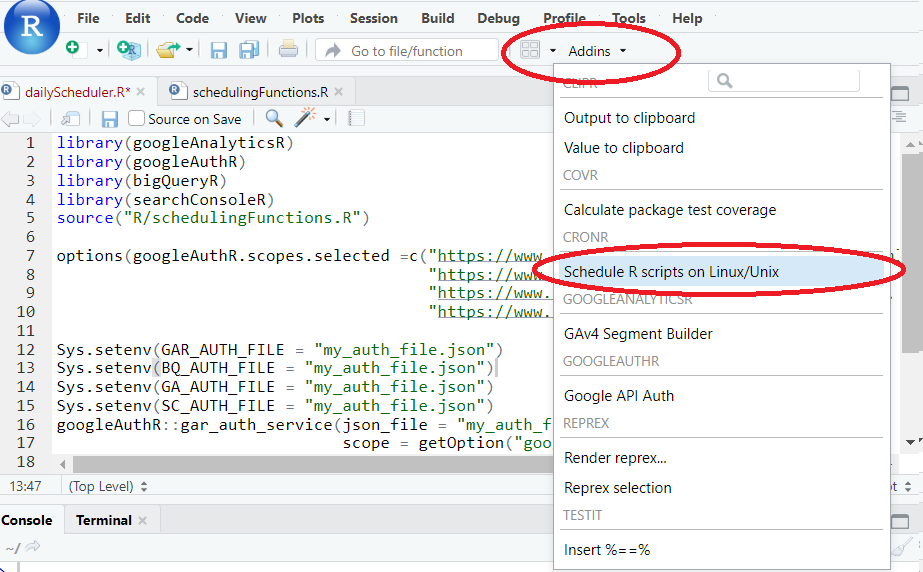

Now that your Cron is running, you can click on "Addins" in the navigation above and then choose "Schedule R scripts...".

You will probably get a question if you want to install the packages. Just click yes.

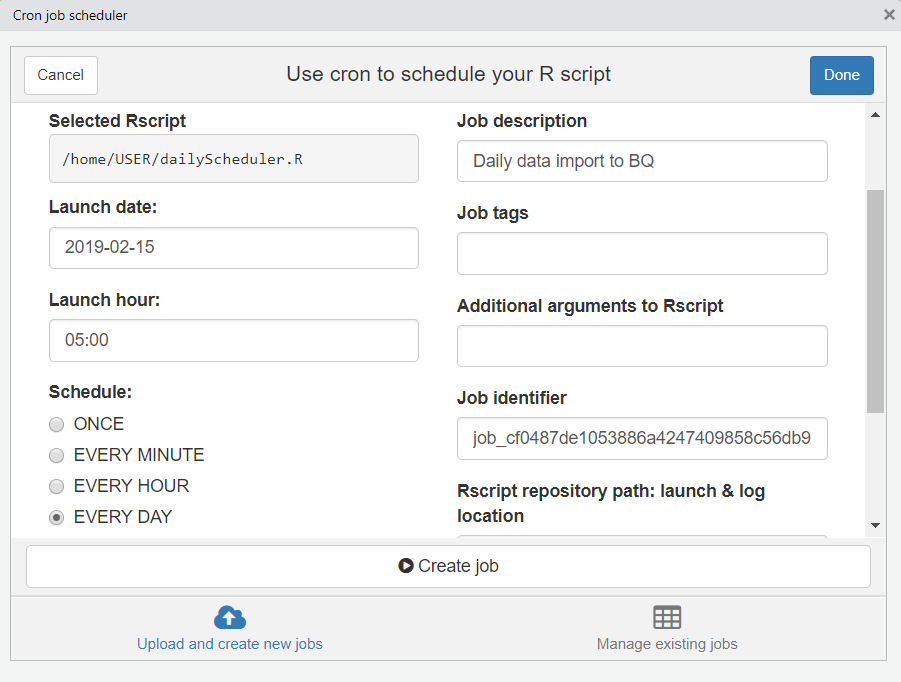

After the installation you should see the Cron job scheduler. There you click on "Select file" and choose "dailyScheduler.R". Choose a job description, pick a launch date and a time for when the script should run. Then you pick how often the script should run.

Now all you have to do is click on "Create job" and your scheduler is set up! Congratulations, you will now save a lot of time in the future!

And as I promised. Here's how you activate the function for sending an email upon errors. Before you can use it you will have to type in gmailr::gmail_auth() in the console in order to authorize a gmail account to be used with RStudio. This will then be stored in a httr-oauth file on your machine.

sendEmail <- function(subject, message, to="first.last@lynuhs.com"){

gmailr::gmail_auth()

daily_email <- mime(

To = to,

From = "EMAIL", # the email you used when authorizing gmailr.

Subject = subject,

body = message)

send_message(daily_email)

}

Hope you enjoyed these posts and that you got this up and running! Feel free to get in touch or comment below if you have any questions!

storme

2019-04-10 09:00

Nice post,

Do we need our PC to be online on execution time to keep the schedule running, or it will keep running even our PC is offline?

Linus

2019-04-11 09:33

Hi, if you are running the script on a local machine, then yes, it will have to be online. If you are using a virtual machine in Google Cloud then it is all based in the cloud, meaning it doesn’t matter what you do with your computer. Does that answer your question?